Async API with API Gateway and the SQS Alternative


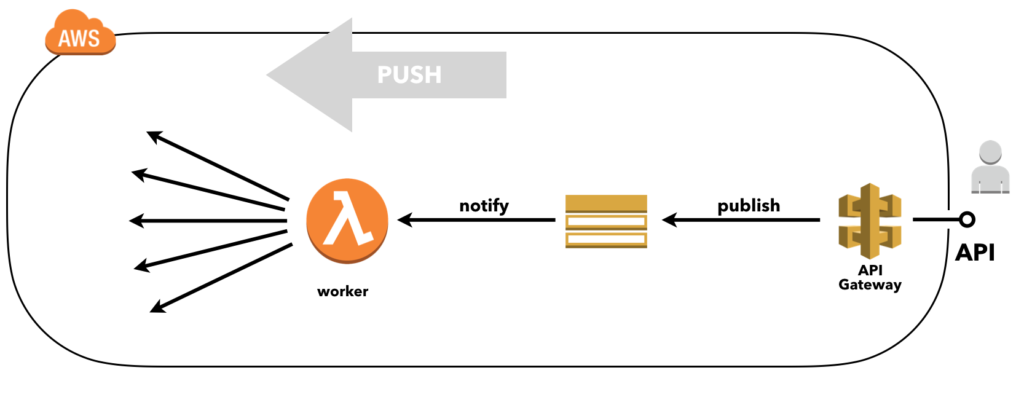

In the previous blog post, we showed how to create an async API by configuring API Gateway to transform and publish requests directly to Amazon Simple Notification Service (SNS). The great thing about SNS is that it can trigger Lambda functions whenever a message is published to a topic. These functions act as workers that do whatever we want.

While this does create an API with an asynchronous nature by decoupling the reply from the initial HTTP request using SNS, a push-based delivery mechanism may not be what you need.

‘push-based delivery example’

SNS Limitations

Whether SNS is right for your situation depends on whether a) the maximum throughput of the downstream dependencies (a ceiling that typically exists due to database connection limits, request throttling by external web services, etc.) is likely to be reached under peak conditions, and b) this peak lasts long enough for the retries to be exhausted. This blog does a great job explaining the theory with nice graphs.

Looking at our API, many concurrent requests result in many Lambda functions being triggered concurrently. These functions all hit the downstream dependencies, and if we run into the limits of one of these dependencies the Lambda functions will start failing, causing retries that may eventually be exhausted.

Long story short, SNS is a great option, except when it is not 😉

The SQS Alternative

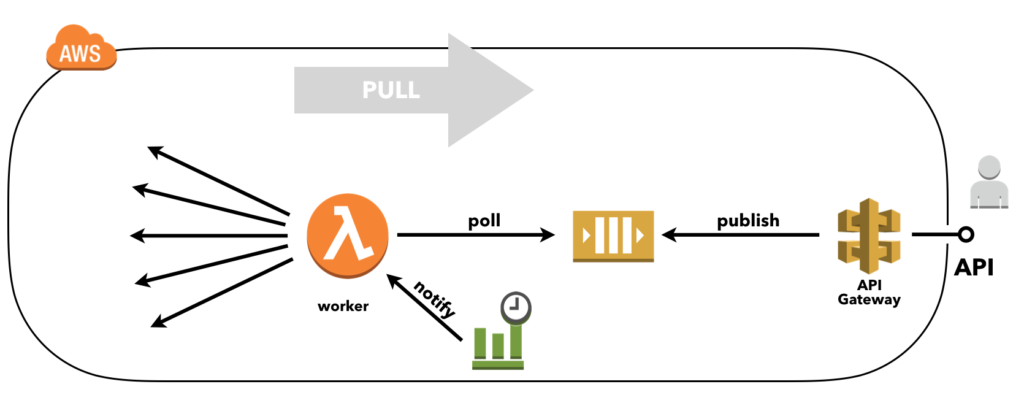

Alternatively, a true message queue can be used, such as Amazon Simple Queue Service (SQS). This is a pull-based delivery mechanism that allows components to poll and process messages at their own pace, instead of getting overloaded and eventually failing.

‘pull-based delivery example’
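To make the difference concrete, here is a deliberately simplified simulation of a burst of messages hitting a downstream dependency with a fixed capacity per tick. The function names, the tick model and the numbers are assumptions made for illustration only; real SNS retry policies use backoff, and real queues have retention limits.

```python
from collections import deque


def push_delivery(burst, capacity_per_tick, max_attempts):
    """Push model: every pending message is attempted on each tick.

    Messages beyond the downstream capacity fail and are retried until
    they run out of attempts, at which point they are dropped.
    """
    pending = [1] * burst  # attempt number of each pending message
    delivered = dropped = 0
    while pending:
        ok = min(capacity_per_tick, len(pending))
        delivered += ok
        retry = []
        for attempts in pending[ok:]:
            if attempts >= max_attempts:
                dropped += 1  # retries exhausted
            else:
                retry.append(attempts + 1)
        pending = retry
    return delivered, dropped


def pull_delivery(burst, capacity_per_tick):
    """Pull model: the worker takes only what it can handle per tick."""
    queue = deque(range(burst))
    delivered = ticks = 0
    while queue:
        for _ in range(min(capacity_per_tick, len(queue))):
            queue.popleft()
            delivered += 1
        ticks += 1
    return delivered, ticks


print(push_delivery(burst=100, capacity_per_tick=10, max_attempts=3))  # (30, 70)
print(pull_delivery(burst=100, capacity_per_tick=10))                  # (100, 10)
```

In this toy model the push variant loses 70 of the 100 messages once its three attempts are used up, while the pull variant delivers all of them, just more slowly.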

Configuring API Gateway to act as a ‘service proxy’ in front of SQS is, just like with SNS, easy once you know how it’s done. These API Gateway integration configurations are difficult to get right, however, and the documentation is again lacking. So just like we did with the SNS solution, we’ll document it here for future reference.

Solution using CloudFormation

First we declare the SQS queue.

    ExampleQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: !Sub "${AWS::StackName}-example-queue"

Next, we configure an API resource (/example) that accepts POST operations and responds with a 202 Accepted. We use the API Gateway extensions to Swagger to create AWS-specific integrations.

The uri is a special ARN that expresses that we want to go from ‘apigateway’ to ‘sqs’, and specifically to the queue referred to in ‘path/<AWS-ACCOUNT-ID>/<QUEUE-NAME>’. This is what API Gateway needs to select the appropriate endpoint for what turns out to be an HTTP-based integration.

The SQS endpoint expects an Action parameter with the value “SendMessage”, denoting the operation to perform, and a MessageBody parameter carrying the payload. We pass both as form-encoded parameters, so we map them accordingly in the request template. Note that this requires moving the payload from the request body of the original HTTP request into the parameter called ‘MessageBody’ in the request for the SQS endpoint.

Another thing that the SQS endpoint expects is that we include the Content-Type HTTP header with the value set to 'application/x-www-form-urlencoded', otherwise it will not work.

    ExampleAPI:
      Type: "AWS::Serverless::Api"
      Properties:
        StageName: "prod"
        DefinitionBody:
          swagger: "2.0"
          info:
            title: !Sub "${AWS::StackName}-api"
          paths:
            /example:
              post:
                responses:
                  "202":
                    description: Accepted
                x-amazon-apigateway-integration:
                  type: "aws"
                  httpMethod: "POST"
                  uri: !Sub "arn:aws:apigateway:${AWS::Region}:sqs:path/${AWS::AccountId}/${ExampleQueue.QueueName}"
                  credentials: !GetAtt ExampleQueueAPIRole.Arn
                  requestParameters:
                    integration.request.header.Content-Type: "'application/x-www-form-urlencoded'"
                  requestTemplates:
                    application/json: "Action=SendMessage&MessageBody=$input.json('$')"
                  passthroughBehavior: "NEVER"
                  responses:
                    default:
                      statusCode: 202
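To get a feel for the kind of form-encoded body the SQS endpoint expects, the sketch below builds one in plain Python. The helper name `build_sqs_form_body` and the sample payload are made up for illustration; this is not what API Gateway runs internally, only an approximation of the shape of the integration request.

```python
import json
from urllib.parse import parse_qs, urlencode


def build_sqs_form_body(payload: dict) -> str:
    """Return an application/x-www-form-urlencoded body for SendMessage."""
    return urlencode({
        "Action": "SendMessage",
        "MessageBody": json.dumps(payload),
    })


# Hypothetical payload, including characters that are reserved in forms.
body = build_sqs_form_body({"orderId": 42, "note": "a&b=c"})
print(body)

# Decoding the form body gives back the two parameters SQS looks for.
print(parse_qs(body)["Action"])  # ['SendMessage']
```

Note that `urlencode` percent-encodes reserved characters such as `&` and `=`. Whether your own payloads need similar treatment in the mapping template depends on what they can contain.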

The ‘credentials’ are provided using the role below. This allows API Gateway to add messages to the queue we created earlier.

    ExampleQueueAPIRole:
      Type: "AWS::IAM::Role"
      Properties:
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: "Allow"
              Principal:
                Service: "apigateway.amazonaws.com"
              Action:
                - "sts:AssumeRole"
        Policies:
          - PolicyName: !Sub "${AWS::StackName}-example-queue-policy"
            PolicyDocument:
              Version: "2012-10-17"
              Statement:
                - Action: "sqs:SendMessage"
                  Effect: "Allow"
                  Resource:
                    - !GetAtt ExampleQueue.Arn
        ManagedPolicyArns:
          - "arn:aws:iam::aws:policy/service-role/AmazonAPIGatewayPushToCloudWatchLogs"
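Once the stack is deployed, a client could submit work roughly as sketched below. The URL is a hypothetical placeholder (the real one depends on your API id and region), and `build_request` and `submit` are names invented for this example.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the invoke URL of your own deployment.
API_URL = "https://example-api-id.execute-api.eu-west-1.amazonaws.com/prod/example"


def build_request(payload, url=API_URL):
    """Build the POST request; the JSON body becomes the SQS message body."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit(payload, url=API_URL):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(build_request(payload, url)) as response:
        return response.status


# Usage, against a real deployment:
#   status = submit({"orderId": 42})
#   # the API defined above should answer with 202
```

The client gets its 202 as soon as the message is accepted. It learns nothing about whether a worker has processed it yet, which is the point of the async design.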

With the SNS example we finished by configuring the Lambda function acting as our worker. Unfortunately, at the time of writing SQS was not one of the supported event sources for Lambda (see the update at the end of this post).

Pull-based worker

To consume from SQS you need a long-running process that polls for new messages at a given interval, for example on a virtual machine or in a Docker container. Or, if you don’t want to maintain infrastructure and want to go ‘serverless’, you could trigger a Lambda function from some other source, such as a CloudWatch Events rule that fires on a regular schedule, just like in the example above.
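A minimal polling worker might look like the sketch below. It assumes a boto3 SQS client is passed in (`receive_message` and `delete_message` are the boto3 calls used). The function names, the `handle` callback and the queue URL in the usage comment are hypothetical.

```python
def poll_once(sqs, queue_url, handle, max_messages=10, wait_seconds=20):
    """Fetch one batch of messages, process each and delete it afterwards.

    `sqs` is expected to behave like a boto3 SQS client. A message is only
    deleted after `handle` returns, so a failing handler leaves it on the
    queue to be received again later.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,
        WaitTimeSeconds=wait_seconds,  # long polling: wait for messages
    )
    processed = 0
    for message in response.get("Messages", []):
        handle(message["Body"])
        sqs.delete_message(
            QueueUrl=queue_url,
            ReceiptHandle=message["ReceiptHandle"],
        )
        processed += 1
    return processed


def run_forever(sqs, queue_url, handle):
    """Keep polling; the worker sets its own pace."""
    while True:
        poll_once(sqs, queue_url, handle)


# Usage (requires boto3 and AWS credentials; the queue URL is a placeholder):
#   import boto3
#   run_forever(boto3.client("sqs"), "<your-queue-url>", print)
```

Passing the client in as an argument keeps the loop easy to test with a stand-in object. The same `poll_once` function could also be called from a Lambda function on a schedule.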

A working example (with deployment scripts, but without a worker consuming SQS) can be found here.

UPDATE 23/07/2018

Since the end of June, AWS supports SQS as a first-class event source for Lambda! The use of CloudWatch Events to trigger polling at a certain interval can now be omitted. See https://aws.amazon.com/blogs/aws/aws-lambda-adds-amazon-simple-queue-service-to-supported-event-sources/

If you read the blog announcing the feature you’ll learn that AWS basically does the ‘undifferentiated heavy lifting’ of polling the queue and triggering the Lambda when a message is present. By monitoring the number of inflight messages and detecting if this number is trending up or down, the polling frequency is altered dynamically. So AWS provides us with a solution which is still pull-based due to the nature of queues, but that can automatically scale up to higher throughput when the queue is being used heavily while keeping polling frequency as low as possible. This is important because polling does happen and you will be charged for the polling requests!
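With SQS as an event source, the worker shrinks to a plain Lambda handler that receives a batch of messages in `event["Records"]`, each with the message text in its `body` field. The sketch below assumes the messages are the JSON payloads posted to our API. The handler name and the return value are illustrative choices.

```python
import json


def handler(event, context):
    """Process a batch of SQS messages delivered by Lambda."""
    payloads = []
    for record in event.get("Records", []):
        # Each record carries the original message body as a string.
        payloads.append(json.loads(record["body"]))

    # Real work on `payloads` would go here. Raising an exception instead
    # of returning makes the messages visible on the queue again later.
    return {"processed": len(payloads)}


# Local smoke test with a hand-written event of the same shape:
sample_event = {"Records": [{"body": json.dumps({"orderId": 42})}]}
print(handler(sample_event, None))  # {'processed': 1}
```

When the handler returns without raising, Lambda removes the batch from the queue on our behalf, so there is no explicit delete call as in a hand-written poller.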